How to Secure AI Agents for Enterprise‑Grade AI Security

Key Takeaways

AI agents are increasingly embedded into enterprise workflows, where they autonomously retrieve data, synthesize information, and take action across business systems. As these agents gain access to sensitive data and operate with increasing independence, they introduce new security risks that traditional controls were not designed to manage.

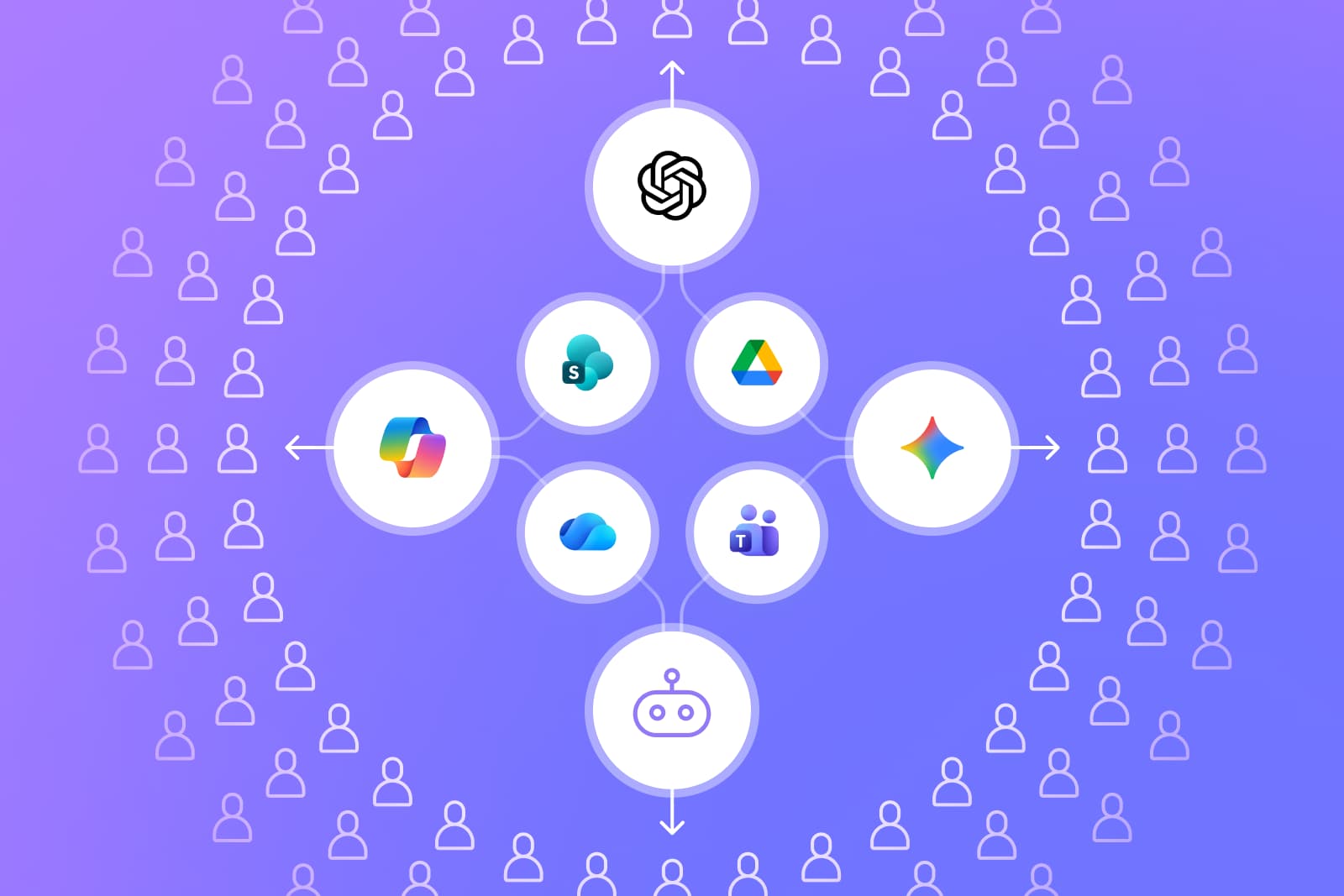

Enterprises build AI agents on platforms such as ChatGPT Enterprise, Copilot Studio, and Google Gemini. This article explains how to secure AI agents in an enterprise context. It focuses on identity, access, data exposure, and operational oversight rather than on the internal mechanics of the underlying AI models.

What Is AI Agent Security?

AI agent security refers to the set of controls, policies, and oversight mechanisms used to manage how AI agents access data, interact with systems, and take action within an enterprise environment.

In an enterprise context, AI agents act as nonhuman actors that can query files, summarize content across repositories, trigger workflows, and perform tasks on behalf of users or teams. Because these agents often operate across multiple applications and inherit permissions from identities, roles, or integrations, they must be secured like any other privileged actor in the environment.

Why AI Agent Security Is Critical

As AI agents move from passive assistance, such as the basic chat modes of ChatGPT and Gemini, to active participation in enterprise workflows, their risk profile changes significantly. Security becomes critical because these agents can act independently across systems, data, and processes at scale.

- Autonomous Actions Without Human Oversight: AI agents can execute tasks, trigger workflows, and retrieve information without direct human approval for each action. When agents operate autonomously, errors, misconfigurations, or misuse can propagate quickly, making it difficult to intervene before they impact business operations.

- Expanded Attack Surface and Data Exposure: By design, agents interact with multiple applications, repositories, and services to complete tasks. Each integration, inherited permission, or connected data source expands the attack surface and increases the likelihood that overshared files or legacy access are surfaced and redistributed through agent activity.

- Threats to Privacy, Compliance, and Business Logic: AI agents often touch regulated data, internal policies, and operational logic embedded in workflows. Without proper controls, agent-driven access or summaries can expose sensitive information, violate regulatory requirements, or unintentionally reveal proprietary processes.

- Risk of Unintended or Harmful Decisions: Agents rely on context, permissions, and instructions to act, but they do not inherently understand business intent or risk tolerance. Inadequate guardrails can lead agents to make decisions that are technically valid but operationally harmful, such as acting on incomplete data or combining information in ways that create downstream risk.

Top Security Threats Facing AI Agents

AI agents are exposed to a distinct set of security threats that differ from traditional user-driven AI interactions. These risks emerge from how agents receive instructions, inherit access, and integrate with external tools, making tailored safeguards essential.

- Prompt Injection and Command Manipulation: Attackers can craft inputs that manipulate an agent’s instructions and alter behavior, causing it to bypass intended safeguards or reveal sensitive information. For agents that perform actions based on natural language instructions, manipulated prompts can lead to unintended actions or data exposure without obvious signs of compromise.

- Overly Broad or Excessive Permissions: AI agents frequently inherit permissions from users, roles, or service accounts. When those permissions are too broad or based on legacy access, agents can access and surface data far beyond what is required for their function.

- Model Manipulation and Data Poisoning: Agents that rely on external data sources or continuously updated knowledge bases can be influenced by corrupted or misleading inputs. Even without direct model access, poisoned data can shape agent outputs and decisions in ways that undermine accuracy and trust.

- Identity Spoofing or Unauthorized Control: If agent identities are not clearly defined and protected, attackers may impersonate agents or hijack their credentials. This can allow unauthorized actors to trigger actions or retrieve data while appearing to be a legitimate automated process.

- Supply Chain Vulnerabilities in Tools and Dependencies: AI agents often depend on plugins, APIs, and third-party services to function. Weaknesses or misconfigurations in these dependencies can introduce indirect attack paths, enabling data leakage or unauthorized actions through otherwise trusted integrations.
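The overly broad permissions threat lends itself to a simple mechanical check: compare what an agent has actually been granted, including inherited access, with what its function requires. The following is a minimal Python sketch under stated assumptions. The `Agent` record, the permission strings, and the hand-maintained `required` set are hypothetical and are not tied to any platform's API.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Agent:
    """Hypothetical agent record; field names are illustrative only."""
    name: str
    granted: set[str]   # permissions the agent holds, including inherited ones
    required: set[str]  # permissions its documented function actually needs


def excess_permissions(agent: Agent) -> set[str]:
    """Return permissions the agent holds but does not need (candidates for removal)."""
    return agent.granted - agent.required


# Example: a summarizer that inherited broad access from its creator's account.
summarizer = Agent(
    name="hr-policy-summarizer",
    granted={"sharepoint:hr:read", "sharepoint:finance:read", "mail:send"},
    required={"sharepoint:hr:read"},
)
print(sorted(excess_permissions(summarizer)))
# ['mail:send', 'sharepoint:finance:read']
```

The set difference itself is trivial; in practice, the hard part is populating `granted` accurately, because inherited access is often spread across user, role, and integration grants.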

Core Principles for Securing AI Agents

Securing AI agents requires a consistent set of principles that treat agents as operational actors with real access and the capacity for substantial impact. These principles establish guardrails that limit exposure, enforce accountability, and maintain control as agents operate across enterprise systems.

AI Agent Risk Assessment and Prioritization

As AI agents proliferate across enterprise environments, organizations need a structured way to understand which agents pose the greatest risk and where controls should be applied first. Risk assessment and prioritization ensure security efforts are aligned to business impact instead of being applied uniformly or reactively.

Categorize Agents by Criticality

Not all agents carry the same level of risk. Some perform low-impact tasks such as summarization or search, while others can modify records, trigger workflows, or influence business decisions. Worse, some agents can take those actions on your most sensitive data. Categorizing agents by functional role and potential impact helps teams distinguish informational agents from those that warrant stricter oversight.

Identify High-Risk Access and Data Touchpoints

Risk increases when agents interact with sensitive repositories, regulated data, or systems of record. Mapping which agents can access critical files, shared folders, or integrated applications helps identify exposure points where existing permissions or legacy access could be amplified through agent activity.

Threat Modeling and Impact Analysis

Once high-risk agents and access paths are identified, teams should assess how those agents could be misused or fail. This includes evaluating likely threat scenarios, potential data exposure, and downstream operational impact if an agent behaves unexpectedly or is compromised.

Prioritize Mitigation Actions

Mitigation efforts should focus first on agents with the highest combination of access breadth and business impact. Prioritization allows security teams to apply tighter controls, monitoring, or remediation where risk is most concentrated, rather than spreading resources thinly across all agents.
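The categorization and prioritization steps above can be approximated with a simple scoring model. The sketch below is a hypothetical illustration, not a standard methodology: the capability and sensitivity weights are assumptions, and multiplying impact by exposure is just one reasonable way to express "access breadth combined with business impact."

```python
from __future__ import annotations

from dataclasses import dataclass

# Hypothetical weights; calibrate these to your own risk model.
CAPABILITY_WEIGHT = {"search": 1, "summarize": 1, "write": 3, "trigger_workflow": 3}
SENSITIVITY_WEIGHT = {"public": 0, "internal": 1, "confidential": 2, "regulated": 3}


@dataclass
class AgentProfile:
    name: str
    capabilities: list[str]       # what the agent can do
    data_sources: dict[str, str]  # connected source -> sensitivity label


def impact(p: AgentProfile) -> int:
    """The most powerful capability sets the ceiling on potential impact."""
    return max((CAPABILITY_WEIGHT[c] for c in p.capabilities), default=0)


def exposure(p: AgentProfile) -> int:
    """Access breadth weighted by sensitivity: more, and more sensitive, sources score higher."""
    return sum(SENSITIVITY_WEIGHT[s] for s in p.data_sources.values())


def category(p: AgentProfile) -> str:
    """Informational agents only read; action-taking agents can change state."""
    return "action-taking" if impact(p) >= 3 else "informational"


def risk_score(p: AgentProfile) -> int:
    return impact(p) * exposure(p)


def prioritize(profiles: list[AgentProfile]) -> list[AgentProfile]:
    """Highest combined impact and exposure first."""
    return sorted(profiles, key=risk_score, reverse=True)


agents = [
    AgentProfile("wiki-search", ["search", "summarize"], {"wiki": "internal", "handbook": "public"}),
    AgentProfile("hr-summarizer", ["summarize"], {"hr_files": "confidential", "benefits": "regulated"}),
    AgentProfile("finance-reconciler", ["search", "write"], {"erp": "regulated", "payroll": "regulated"}),
]
for a in prioritize(agents):
    print(a.name, category(a), risk_score(a))
# finance-reconciler action-taking 18
# hr-summarizer informational 5
# wiki-search informational 1
```

Multiplying rather than adding means that an agent with powerful capabilities but no sensitive data, or sensitive data but only read access, does not automatically rise to the top. Adjust the formula if your organization weighs those factors differently.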

Challenges in Securing AI Agents

Efforts to secure AI agents introduce practical challenges that go beyond traditional user or application security. These challenges stem from how agents operate autonomously, span systems, and evolve over time.

- Lack of Visibility Into Agent-Initiated Actions: Agents can initiate queries, retrieve data, or trigger workflows without security teams seeing those actions in real time. This visibility gap makes it difficult to understand what actions agents are taking, which data they are accessing, and whether those actions align with policy.

- Agents Operating Across Multiple Systems and Tools: AI agents rarely operate in isolation. They often span file repositories, collaboration platforms, SaaS applications, and third-party services, creating fragmented control points and making consistent enforcement harder.

- Difficulty Enforcing Least Privilege at Scale: As the number of agents grows, maintaining tightly scoped permissions becomes increasingly complex. Agents frequently inherit access from users, roles, or integrations, making it easy for excessive or outdated permissions to persist unnoticed.

- Policy Drift as Agents Learn and Adapt: Agents may change behavior as workflows evolve, data sources are updated, or instructions are modified. Over time, this can cause agents to operate outside their original risk assumptions unless policies are continuously reassessed.

- Audit and Compliance Gaps for Nonhuman Actors: Many audit and compliance processes are designed around human users. Applying the same rigor to nonhuman agents is challenging, particularly when logs, ownership, or accountability are unclear or inconsistently enforced.

Best Practices for Securing AI Agents

As agent environments grow, security principles must be translated into day-to-day controls. This requires clear, repeatable best practices that teams can apply consistently.

How Opsin Security Protects Your AI Agents And Your Business

Opsin is designed to help enterprises secure their AI agents, and the business processes those agents touch, by addressing the identity, access, data exposure, and oversight challenges that emerge as agents operate across files, users, and workflows. Its capabilities focus on visibility, risk reduction, and continuous enforcement rather than model-level controls.

- Identity-Aware Protection for AI Agents: Opsin Agent Defense maintains a comprehensive inventory of custom agents and captures critical context (such as identity, ownership, connected data sources, tools, permissions, and instructions) so security teams can clearly see who created each agent and understand the scope of what it can access and execute.

- Intent-Based Agent Classification and Risk Prioritization: Opsin Agent Defense classifies AI agents by intent and context to identify which are most critical to the business and which pose the highest risk. This classification gives security teams clear visibility into high-impact agents that warrant immediate attention. Based on each agent’s risk profile, Opsin provides instructions that enable teams to remediate issues, apply controls, and manage AI agents safely and consistently.

- Real-Time Threat Detection and Alerting: Opsin’s AI Detection & Response continuously monitors AI agent activity to detect risky behavior, data exposure, and policy violations as they occur. Real-time alerts surface high-impact issues early, enabling security teams to respond quickly before agent-driven actions propagate risk across the environment.

- Policy Enforcement at the Agent Level: Opsin maps internal AI governance policies into automated enforcement mechanisms and real-time alerts. This continuous oversight identifies risky AI agent behavior and oversharing patterns across platforms like Copilot and Gemini before they turn into business-impacting data breaches.

- Risk-Based AI Readiness and Posture Assessment: Opsin’s AI Readiness Assessment provides visibility into where enterprise data is overshared before AI agents are deployed. By identifying exposed files, excessive permissions, and high-risk repositories, Opsin helps teams understand agent risk upfront and prioritize remediation based on business impact.

- Continuous Monitoring and Guided Remediation: Opsin continuously monitors how AI agents access and surface enterprise data, identifying oversharing and policy violations in real time. When risk is detected, Opsin guides teams with prioritized, context-rich remediation steps so exposure can be reduced safely without disrupting business operations.

- Auditable Logs and Compliance Reporting: Opsin maintains detailed, auditable records of AI-related activity, including which users are interacting with which agents, autonomous agent runs, and data lineage between agents. This ensures full visibility and accountability across all agent deployments and supports compliance and audit requirements.

Conclusion

As AI agents take on more autonomous roles inside the enterprise, security must evolve beyond user-centric controls. Securing agents requires visibility into identity and access, continuous monitoring of how data is surfaced, and enforcement that prevents existing oversharing from being amplified at scale. With the right guardrails in place, enterprises can deploy AI agents safely.

FAQ

Why does oversharing matter more for AI agents than for humans?

Because agents can systematically surface and redistribute sensitive data at machine speed.

• Agents inherit permissions across systems, amplifying legacy access issues.

• Summaries and responses can unintentionally expose regulated or confidential data.

• Oversharing risk increases when agents combine data from multiple repositories.

• Continuous access reviews matter more than one-time cleanups.
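To make the inherited-access point concrete, here is a hypothetical Python sketch that flags sensitive files an agent can read that are also shared with a broad group. The group names, the ACL representation, and the sensitivity labels are assumptions for illustration; real platforms expose sharing data through their own APIs.

```python
from __future__ import annotations

# Hypothetical group names that indicate organization-wide or public sharing.
BROAD_GROUPS = {"Everyone", "All Employees", "Anyone with the link"}


def agent_can_read(acl: set[str], agent_groups: set[str]) -> bool:
    """An agent can read a file if it shares at least one group with the file's ACL."""
    return bool(acl & agent_groups)


def oversharing_findings(
    file_acls: dict[str, set[str]],
    agent_groups: set[str],
    sensitive_paths: set[str],
) -> list[str]:
    """Sensitive files the agent can read that are also shared with a broad group."""
    return [
        path
        for path, acl in sorted(file_acls.items())
        if path in sensitive_paths
        and agent_can_read(acl, agent_groups)
        and acl & BROAD_GROUPS
    ]


file_acls = {
    "/hr/salaries.xlsx": {"HR", "Everyone"},  # legacy broad share
    "/hr/policy.pdf": {"Everyone"},           # broad, but not sensitive
    "/finance/forecast.xlsx": {"Finance"},    # sensitive, but not readable by the agent
}
sensitive = {"/hr/salaries.xlsx", "/finance/forecast.xlsx"}
print(oversharing_findings(file_acls, {"Everyone", "Support"}, sensitive))
# ['/hr/salaries.xlsx']
```

Running a check like this per agent, rather than once per repository, reflects the FAQ's point that continuous reviews matter more than one-time cleanups.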

See how oversharing creates real enterprise risk in generative AI environments.

How do you threat-model an AI agent beyond prompt injection?

By analyzing how identity, permissions, tools, and data dependencies interact at runtime.

• Map every external system, plugin, and API the agent can invoke.

• Evaluate worst-case impact if the agent misbehaves or is compromised.

• Test dependency risks, including poisoned data sources and third-party tools.

• Prioritize controls for agents with both high autonomy and high data sensitivity.
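Mapping every system an agent can invoke and evaluating worst-case impact amounts to a reachability question: if the agent is compromised, what can it reach transitively through its tools? The sketch below models this as a directed graph and uses breadth-first search. The node names, edges, and numeric sensitivity scores are hypothetical.

```python
from __future__ import annotations

from collections import deque


def reachable(graph: dict[str, list[str]], start: str) -> set[str]:
    """All nodes reachable from `start` via breadth-first search (excluding `start`)."""
    seen = {start}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        for nxt in graph.get(node, []):
            if nxt not in seen:
                seen.add(nxt)
                queue.append(nxt)
    seen.discard(start)
    return seen


def worst_case_exposure(
    graph: dict[str, list[str]], sensitivity: dict[str, int], agent: str
) -> list[str]:
    """Sensitive resources a compromised agent could reach, most sensitive first."""
    hits = [n for n in reachable(graph, agent) if sensitivity.get(n, 0) > 0]
    return sorted(hits, key=lambda n: (-sensitivity[n], n))


# Edges point from an agent or tool to whatever it can call or read.
graph = {
    "support-agent": ["crm-plugin", "email-api"],
    "crm-plugin": ["customer-records", "billing-api"],
    "billing-api": ["payment-data"],
    "email-api": ["external-recipients"],
}
sensitivity = {"payment-data": 3, "customer-records": 2, "external-recipients": 1}
print(worst_case_exposure(graph, sensitivity, "support-agent"))
# ['payment-data', 'customer-records', 'external-recipients']
```

Note that `payment-data` is never connected to the agent directly; it appears only once the plugin's own dependencies are followed, which a review limited to the agent's direct connections would miss.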

Explore advanced AI threat patterns beyond prompts.

When should human-in-the-loop controls be mandatory for agents?

Whenever an agent’s action is irreversible, high-impact, or compliance-sensitive.

• Require approval for write actions, workflow triggers, or external sharing.

• Gate actions affecting regulated data, financial systems, or production environments.

• Log both agent intent and final approved execution for auditability.

• Periodically reassess which actions still require human review as agents evolve.
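The approval rules above can be sketched as a gate that sits between an agent's proposed action and its execution. In this hypothetical Python example, the action and target categories, the `approver` callback (a stand-in for a real human review step), and the audit-record fields are all assumptions. The gate logs both the agent's stated intent and whether the action was ultimately executed.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Callable, Optional

# Hypothetical categories drawn from the rules above.
GATED_ACTIONS = {"write", "trigger_workflow", "external_share"}
SENSITIVE_TARGETS = {"regulated_data", "financial_system", "production"}


@dataclass
class ActionRequest:
    agent: str
    action: str
    target: str
    intent: str  # the agent's stated reason for the action


def requires_approval(req: ActionRequest) -> bool:
    return req.action in GATED_ACTIONS or req.target in SENSITIVE_TARGETS


@dataclass
class ApprovalGate:
    approver: Callable[[ActionRequest], bool]  # stand-in for human review
    audit_log: list[dict] = field(default_factory=list)

    def execute(self, req: ActionRequest, run: Callable[[], str]) -> Optional[str]:
        needs_review = requires_approval(req)
        approved = self.approver(req) if needs_review else True
        result = run() if approved else None
        # Record intent alongside the final decision so reviewers can audit both.
        self.audit_log.append({
            "time": datetime.now(timezone.utc).isoformat(),
            "agent": req.agent,
            "action": req.action,
            "target": req.target,
            "intent": req.intent,
            "approval_required": needs_review,
            "executed": approved,
        })
        return result


gate = ApprovalGate(approver=lambda req: False)  # simulate a reviewer rejecting
print(gate.execute(ActionRequest("billing-agent", "write", "financial_system", "fix invoice total"), run=lambda: "updated"))
# None
print(gate.execute(ActionRequest("search-agent", "read", "wiki", "answer a question"), run=lambda: "results"))
# results
print([(e["agent"], e["executed"]) for e in gate.audit_log])
# [('billing-agent', False), ('search-agent', True)]
```

A production version would more likely route gated requests to an asynchronous review queue than call a synchronous function, and the `GATED_ACTIONS` and `SENSITIVE_TARGETS` sets are exactly what the periodic reassessment above should revisit.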

For deeper guidance on securing autonomous enterprise AI, watch the interview with Oz Wasserman on DM Radio.

How does Opsin help security teams see what AI agents are actually doing?

Opsin provides continuous, identity-aware visibility into agent access, behavior, and risk.

• Maintains a live inventory of agents, owners, permissions, and connected data sources.

• Monitors agent activity across platforms like Copilot and Gemini in real time.

• Detects risky behavior such as oversharing, policy violations, and abnormal access.

• Produces auditable logs for investigations and compliance reviews.

Learn more about Opsin’s AI Detection & Response capabilities.

How does Opsin reduce AI agent risk before agents are even deployed?

By identifying overshared data and excessive permissions that agents would otherwise amplify.

• Assesses file repositories and SaaS platforms for pre-existing exposure.

• Prioritizes remediation based on business impact, not raw file counts.

• Helps teams right-size access before agents inherit it.

• Enables safer AI rollouts without blocking productivity.

See how Opsin’s AI Readiness Assessment prepares enterprises for secure agent deployment.