Why Agentic AI Security Matters for Modern Organizations & Compliance Needs

Key Takeaways

Unlike traditional generative AI tools that respond to individual prompts, agentic AI systems can autonomously plan tasks, invoke tools, access enterprise data, and take actions across business systems on behalf of users. This autonomy delivers greater productivity gains, but it also introduces new security and compliance realities that many organizations are unprepared for.

As AI agents inherit permissions, interact with sensitive files, and operate continuously in the background, existing access misconfigurations and overshared data become amplified risks. Exposures that were once passive can quickly propagate across teams and workflows.

This raises urgent questions in regulated organizations, particularly around accountability, auditability, and control. As such, agentic AI security must not be treated as an optional enhancement, but as a foundational requirement in environments running autonomous systems.

What Is Agentic AI Security?

Agentic AI security refers to the controls, governance mechanisms, and monitoring capabilities required to manage AI systems that can act autonomously within enterprise environments. It addresses how agents, such as Gemini, Microsoft Copilot, and ChatGPT Enterprise–based assistants, interact with organizational data, identities, tools, and workflows once they are deployed.

Agentic AI security is concerned with what agents can access, what actions they can take, and how those actions are observed and governed over time. Agents often operate autonomously using inherited user permissions, service accounts, or delegated credentials, allowing them to retrieve files, query systems, trigger workflows, or coordinate tasks across multiple platforms.

Without proper constraints, this autonomy can expose overshared data, extend excessive permissions, and obscure accountability. Effective agentic AI security ensures that autonomous behavior remains aligned with enterprise policies and compliance requirements.

This includes enforcing least-privilege access, maintaining visibility into agent activity, detecting misuse or anomalous behavior, and producing audit-ready evidence that clearly links agent actions back to responsible identities and approved use cases.

Why Agentic AI Security Matters for Enterprises

As agentic AI moves from isolated use cases into key business workflows, its impact extends beyond productivity into operational stability and compliance posture. Understanding why agentic AI security matters requires examining how autonomous behavior affects business systems, regulatory obligations, and the scale at which the ensuing risk can accumulate.

Operational Impact of Autonomous AI on Business Systems

Agentic AI systems operate across enterprise environments in ways that directly affect day-to-day business operations. By acting continuously and independently, agents can retrieve information, update records, trigger workflows, or coordinate tasks across systems such as Microsoft Teams or Trello, without explicit user intervention.

While these actions increase efficiency, they also introduce operational risk when agents act on incomplete context or rely on inherited access that no longer reflects current business intent. Moreover, small configuration issues, such as outdated permissions or poorly scoped access, can scale quickly when agents operate at machine speed.

Errors or unintended actions may likewise propagate across systems before teams are even aware that an issue has occurred. Without the guardrails and visibility enabled by agentic AI security, organizations risk operational disruptions that are difficult to trace back to a single decision or actor.

Regulatory and Compliance Implications of Agentic AI Adoption

From a compliance perspective, agentic AI challenges traditional assumptions about responsibility and oversight. Regulations often require organizations to demonstrate who accessed sensitive data, why access was granted, and how decisions were made.

When autonomous agents act across systems, answering these questions becomes significantly harder. The difficulty is compounded by the fact that many regulatory frameworks expect consistent enforcement of access controls, complete audit trails, and demonstrable accountability.

Agent-driven actions that lack proper logging or attribution can prevent compliance teams from producing required evidence for audits or investigations. Accordingly, agentic AI security becomes a prerequisite for meeting regulatory obligations.

Risk Amplification Through Autonomy and Decision-Making

Autonomy fundamentally changes the scale and speed of risk. Unlike user-initiated decisions, agent decisions can rapidly cascade, combining data from multiple sources and executing follow-on actions automatically. This creates a multiplier effect where minor oversights lead to disproportionate exposure.

As decision-making shifts from users to systems, enterprises must account for how agents interpret goals, select actions, and reuse data over time. Without strong security and governance controls, autonomy increases the likelihood that overshared data, excessive permissions, or misaligned objectives translate into sustained and hard-to-contain risk across the organization.

Enterprise Risks Unique to Agentic & Generative AI

Agentic and generative AI introduce risk patterns that differ from traditional application or user-driven activity. These risks stem in part from how AI systems inherit access, operate continuously, and act across enterprise environments with limited direct oversight.

1. Data Oversharing and Misconfiguration Exposure

Agentic AI systems frequently operate on top of existing enterprise file stores, collaboration platforms (e.g., Google Workspace), and knowledge repositories. When those environments contain legacy permissions, broadly shared folders, or misconfigured access controls, agents, including those powered by platforms like Google Gemini, can surface and redistribute information far beyond its intended audience.

Unlike manual access, this exposure does not require a user to deliberately open or share a file. Agents can summarize, combine, and propagate overshared data automatically, increasing the blast radius of long-standing access issues.
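As a rough illustration of the exposure described above, the sketch below flags sensitive files whose sharing settings make them reachable by any agent that inherits broad access. The `FileAcl` record, the `BROAD_PRINCIPALS` labels, and the sample paths are hypothetical and do not correspond to any specific platform's API.

```python
from dataclasses import dataclass
from typing import Iterable, List

# Hypothetical labels for "everyone can see this" style sharing.
BROAD_PRINCIPALS = {"anyone_with_link", "all_employees"}


@dataclass(frozen=True)
class FileAcl:
    """Hypothetical, simplified view of one file's sharing state."""
    path: str
    sensitive: bool
    shared_with: frozenset


def overshared_sensitive_files(files: Iterable[FileAcl]) -> List[str]:
    # A file is flagged when it is marked sensitive AND shared with at
    # least one broad principal; an agent with inherited access could
    # surface it without anyone deliberately opening it.
    return [
        f.path
        for f in files
        if f.sensitive and f.shared_with & BROAD_PRINCIPALS
    ]


inventory = [
    FileAcl("hr/salaries.xlsx", True, frozenset({"all_employees"})),
    FileAcl("hr/handbook.pdf", False, frozenset({"all_employees"})),
    FileAcl("legal/contract.docx", True, frozenset({"legal-team"})),
]
flagged = overshared_sensitive_files(inventory)
```

Running a check like this before enabling an agent gives teams a list of long-standing sharing issues to fix at the source.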

2. Copilot, Agents & Internal AI Blind Spots

Enterprises often lack a complete inventory of the AI agents, copilots, and custom assistants operating in their environments, including those associated with tools such as Microsoft Copilot and ChatGPT Enterprise.

Business users can create agents or automated workflows that inherit broad permissions and operate continuously, often outside traditional security visibility. These blind spots make it difficult to understand which agents exist, what data they can access, and how they behave over time, increasing the risk of unmanaged exposure and unmonitored activity.

3. Insider and Misuse Behavior Patterns

Agentic AI changes how insider risk manifests. Employees may unintentionally deploy agents with excessive access or reuse them in contexts they were never designed for. In other cases, agents can be deliberately misused to aggregate sensitive information or perform actions that would normally trigger scrutiny if done manually. Because actions are delegated to systems, misuse can be harder to attribute.

4. Compliance and Audit Evidence Gaps

Autonomous agents often act across systems without producing the same level of contextual logging expected from human activity. When agent actions are insufficiently logged or poorly attributed, organizations struggle to demonstrate compliance during audits or investigations.

Gaps in evidence around who authorized an agent, what data it accessed, and why actions occurred can quickly become regulatory liabilities in highly governed environments.

Agentic AI Security Threat Vectors

The following threat vectors illustrate how agentic AI introduces security risks that differ in nature and scale from traditional user-driven activity. Each vector reflects how autonomy, delegated access, and continuous operation can be exploited or fail without adequate controls.

Best Practices for Agentic AI Security

Securing agentic AI requires controls that account for autonomy, continuous operation, and delegated access across enterprise systems. The following best practices focus on limiting unintended behavior, maintaining visibility, and ensuring accountability as agents act on behalf of users and organizations.

Constraining Agent Autonomy

Agent autonomy should be explicitly scoped to defined tasks, datasets, and actions rather than left open-ended. This includes limiting where agents can operate, what decisions they are allowed to make independently, and when human approval is required. Clear boundaries reduce the risk of agents acting on stale context, expanding beyond their intended purpose, or compounding small configuration issues into larger incidents.
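One way to picture explicit scoping is a small policy object that is consulted before every agent step. The following is a minimal sketch under assumed names (`AgentScope`, `evaluate`, and the sample actions and datasets are all hypothetical); it denies by default and routes designated actions to human approval.

```python
from dataclasses import dataclass, field
from enum import Enum


class Decision(Enum):
    ALLOW = "allow"
    REQUIRE_APPROVAL = "require_approval"
    DENY = "deny"


@dataclass(frozen=True)
class AgentScope:
    """Hypothetical scope declaration for a single agent."""
    allowed_actions: frozenset
    allowed_datasets: frozenset
    approval_required_actions: frozenset = field(default_factory=frozenset)


def evaluate(scope: AgentScope, action: str, dataset: str) -> Decision:
    # Deny by default: anything outside the declared scope is rejected.
    if action not in scope.allowed_actions:
        return Decision.DENY
    if dataset not in scope.allowed_datasets:
        return Decision.DENY
    # In-scope actions that change state can still need a human sign-off.
    if action in scope.approval_required_actions:
        return Decision.REQUIRE_APPROVAL
    return Decision.ALLOW


scope = AgentScope(
    allowed_actions=frozenset({"read", "summarize", "update_record"}),
    allowed_datasets=frozenset({"support_tickets"}),
    approval_required_actions=frozenset({"update_record"}),
)
```

Keeping the scope immutable and separate from the agent's own logic means the boundary cannot be widened by the agent at run time.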

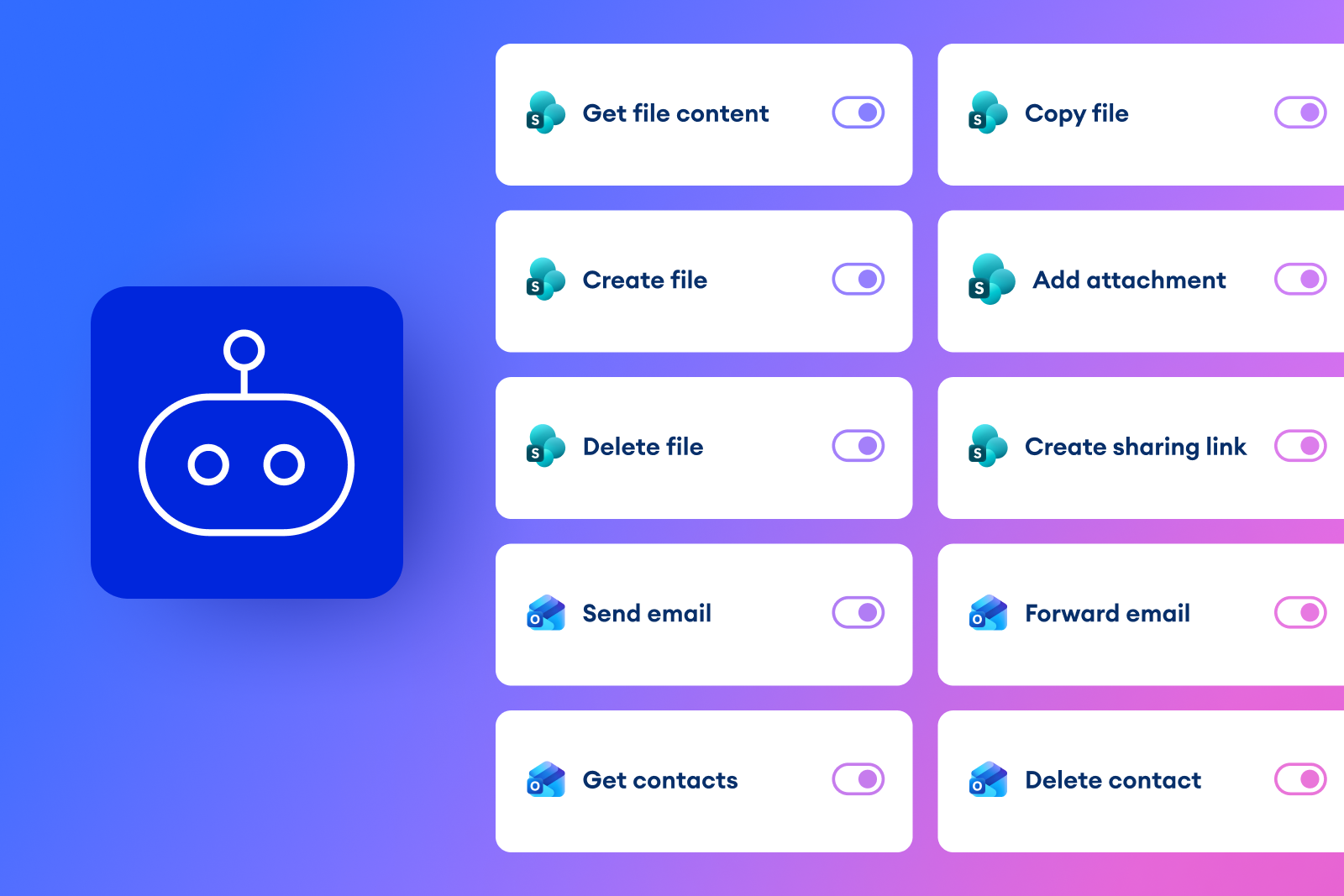

Secure Tool Invocation and Permissioning

Agents should only be allowed to invoke tools, integrations, and APIs that are necessary for their function. Permissions must be tightly scoped, regularly reviewed, and aligned to least-privilege principles. Overly broad tool access increases the likelihood that agents trigger unintended actions or interact with systems in ways that bypass established business controls.
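Least-privilege tool access can be sketched as a gateway that sits between agents and the tools they call. The `ToolGateway` class, the agent ID, and the two sample tools below are hypothetical and stand in for whatever integration layer an organization actually uses.

```python
from typing import Any, Callable, Dict, Set


class ToolNotPermitted(Exception):
    """Raised when an agent requests a tool outside its allowlist."""


class ToolGateway:
    def __init__(
        self,
        tools: Dict[str, Callable[..., Any]],
        grants: Dict[str, Set[str]],
    ) -> None:
        self._tools = tools    # tool name -> callable
        self._grants = grants  # agent id -> permitted tool names

    def invoke(self, agent_id: str, tool_name: str, **kwargs: Any) -> Any:
        # Agents with no explicit grant get an empty allowlist.
        permitted = self._grants.get(agent_id, set())
        if tool_name not in permitted or tool_name not in self._tools:
            raise ToolNotPermitted(f"{agent_id} may not call {tool_name}")
        return self._tools[tool_name](**kwargs)


def search_docs(query: str) -> str:
    return f"results for {query}"


def send_email(to: str, body: str) -> str:
    return f"sent to {to}"


gateway = ToolGateway(
    tools={"search_docs": search_docs, "send_email": send_email},
    grants={"helpdesk-agent": {"search_docs"}},
)
```

Because every call passes through one choke point, reviewing an agent's permissions reduces to reviewing its entry in `grants`.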

Continuous Monitoring of Agent Activity and Data Access

Because agents operate continuously, security teams need persistent visibility into what agents access and how they behave over time. Monitoring should cover data access patterns, system interactions, and execution frequency across environments. Continuous oversight helps organizations detect drift between intended and actual agent behavior before issues escalate into operational or compliance events.
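Drift between intended and actual behavior can be approximated by comparing observed access events against what each agent was declared to need. This is a simplified sketch; the event shape `(agent_id, resource)`, the `find_drift` helper, and the sample agents are assumptions made for illustration.

```python
from collections import Counter
from typing import Dict, Iterable, Set, Tuple


def find_drift(
    declared: Dict[str, Set[str]],
    events: Iterable[Tuple[str, str]],
) -> Dict[str, Counter]:
    """Return, per agent, a count of accesses to undeclared resources."""
    drift: Dict[str, Counter] = {}
    for agent_id, resource in events:
        # Agents missing from the declaration have no intended resources,
        # so every access they make counts as drift.
        if resource not in declared.get(agent_id, set()):
            drift.setdefault(agent_id, Counter())[resource] += 1
    return drift


declared = {
    "hr-bot": {"hr/policies"},
    "sales-bot": {"crm/accounts"},
}
events = [
    ("hr-bot", "hr/policies"),
    ("hr-bot", "finance/payroll"),
    ("hr-bot", "finance/payroll"),
    ("sales-bot", "crm/accounts"),
    ("unknown-bot", "crm/accounts"),
]
drift = find_drift(declared, events)
```

In this sample, `hr-bot` shows repeated access to a resource outside its declared purpose, and `unknown-bot` surfaces as an agent that was never inventoried.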

Detection of Risky, Anomalous, or Policy-Violating Behavior

Agentic environments require the ability to detect deviations from expected behavior. This includes identifying unusual access patterns, unexpected data aggregation, or actions that violate internal policies. Early detection enables organizations to intervene quickly, reducing the window in which automated behavior can propagate risk.
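Detection approaches vary widely. As one simple illustration, and not a method the article prescribes, the sketch below flags an agent whose daily access count is far above its own baseline using a z-score. The function name, the threshold of 3.0, and the sample history are assumptions.

```python
from statistics import mean, pstdev
from typing import Sequence


def is_anomalous(
    history: Sequence[int],
    today: int,
    threshold: float = 3.0,
) -> bool:
    """Flag today's access count if it is unusually high versus history."""
    if len(history) < 2:
        return False  # not enough data to form a baseline
    mu = mean(history)
    sigma = pstdev(history)
    if sigma == 0:
        # A perfectly flat baseline: any increase is worth a look.
        return today > mu
    # Only upward deviations are flagged in this sketch.
    return (today - mu) / sigma > threshold


# Hypothetical daily file-access counts for one agent.
history = [40, 42, 38, 41, 39]
```

A real deployment would likely combine several signals (data sensitivity, time of day, aggregation across sources) rather than rely on volume alone.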

Audit Trails, Logging, and Evidence Collection

All agent actions should be logged with sufficient context to support audits, investigations, and regulatory review. Logs must clearly capture what actions occurred, which identities were used, what data was accessed, and why actions were authorized. Strong evidence collection is essential for demonstrating accountability in environments where decisions are increasingly automated.
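The fields listed above map naturally onto a structured log record. The sketch below shows one possible shape, serialized as a JSON line; the `AgentAuditRecord` fields and helper names are hypothetical and would need to match an organization's own logging schema.

```python
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone
from typing import Optional


@dataclass(frozen=True)
class AgentAuditRecord:
    timestamp: str        # when the action occurred (UTC, ISO 8601)
    agent_id: str         # which agent acted
    acting_identity: str  # user or service account whose access was used
    action: str           # what was done
    resource: str         # what data was touched
    authorization: str    # why the action was permitted


def make_record(
    agent_id: str,
    acting_identity: str,
    action: str,
    resource: str,
    authorization: str,
    now: Optional[datetime] = None,
) -> AgentAuditRecord:
    # `now` is injectable so records can be reproduced in tests.
    ts = (now or datetime.now(timezone.utc)).isoformat()
    return AgentAuditRecord(
        ts, agent_id, acting_identity, action, resource, authorization
    )


def to_json_line(record: AgentAuditRecord) -> str:
    # Sorted keys keep log lines stable and easy to compare.
    return json.dumps(asdict(record), sort_keys=True)
```

Recording the acting identity alongside the agent ID is what lets an auditor trace an automated action back to a responsible person or service account.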

Human-in-the-Loop Oversight and Governance Controls

Even in highly autonomous environments, humans remain responsible for outcomes. Organizations should define when agents require approval, how exceptions are handled, and who is accountable for agent behavior. Governance processes that include periodic reviews, ownership assignment, and escalation paths ensure autonomy does not come at the expense of control or compliance.
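An approval step with a named owner can be sketched as a queue that holds an agent's proposed action until the accountable person signs off. All names here (`PendingAction`, `ApprovalQueue`, the sample agent and owner) are hypothetical, and the single-owner rule is a simplifying assumption.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Optional


@dataclass
class PendingAction:
    agent_id: str
    owner: str                  # person accountable for this agent
    description: str
    execute: Callable[[], str]  # deferred action, run only after approval
    approved_by: Optional[str] = None


class ApprovalQueue:
    def __init__(self) -> None:
        self._pending: Dict[int, PendingAction] = {}
        self._next_ticket = 1

    def submit(self, action: PendingAction) -> int:
        ticket = self._next_ticket
        self._next_ticket += 1
        self._pending[ticket] = action
        return ticket

    def approve(self, ticket: int, approver: str) -> str:
        action = self._pending[ticket]
        # Only the assigned owner may approve in this simplified model.
        if approver != action.owner:
            raise PermissionError(f"{approver} does not own {action.agent_id}")
        del self._pending[ticket]
        action.approved_by = approver
        return action.execute()

    def pending_count(self) -> int:
        return len(self._pending)
```

The action's side effect runs only inside `approve`, so an unapproved request stays queued and visible instead of executing silently.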

How Opsin Security Strengthens Agentic AI Security

Translating agentic AI security best practices into day-to-day operations requires visibility, prioritization, and enforceable controls. Opsin Security operationalizes these requirements by helping organizations discover agentic AI usage, assess risk in context, and respond quickly when autonomous behavior creates exposure.

- Continuous Discovery of AI Agents, Copilots, and Internal AI Tools: Opsin continuously catalogs copilots, agents, and AI apps in use, including how they access and share sensitive data, helping eliminate blind spots and hidden data connections.

- Proactive Risk Assessment and Prioritization of AI-Driven Exposure: Opsin identifies where sensitive data is overshared with AI agents, copilots, and autonomous workflows across platforms such as Microsoft 365 and Google Workspace, applying risk scoring and context so teams can prioritize the highest-impact exposures.

- Contextual Monitoring and Detection of Risky Agentic AI Behavior: Opsin provides ongoing protection by monitoring AI interactions and queries in real time to spot sensitive data exposure as it happens, supporting early detection of risky usage patterns and configuration drift.

- Intelligent Alerts With Clear Incident Context and Response Guidance: When risky exposure patterns are detected, Opsin triggers alerts immediately and includes context to help teams understand what is overshared and why. This results in faster and more targeted responses.

- Remediation Workflows That Enable Accountability and Faster Resolution: Opsin provides step-by-step guidance to fix oversharing and data exposure issues at the source and decentralizes remediation via email or instant messaging, helping security, IT, and data owners resolve issues efficiently while maintaining oversight.

Conclusion

Agentic AI changes the enterprise security equation by turning existing access, permissions, and data exposure into continuously executed actions rather than isolated events. Without dedicated security and governance controls, autonomy amplifies operational risk and complicates compliance, auditability, and accountability at scale.

Organizations that treat agentic AI security as a core requirement rather than an afterthought are best positioned to adopt autonomous systems responsibly while maintaining control, trust, and regulatory readiness.

FAQ

Why is agentic AI riskier than traditional generative AI tools?

Agentic AI is riskier because it can take autonomous actions across systems using inherited permissions, not just generate responses to prompts.

• Agents can act continuously without real-time human review.

• Small access misconfigurations can cascade across multiple tools and datasets.

• Errors propagate faster because actions are executed at machine speed.

• Accountability becomes harder when actions aren’t directly user-initiated.

Learn more about AI security blind spots to better understand how overshared data becomes amplified by AI tools.

How does agentic AI change the threat model for identity and access management (IAM)?

Agentic AI shifts IAM risk from episodic user access to persistent, delegated identities acting at scale.

• Service accounts and tokens become high-value attack surfaces.

• Privilege creep is harder to detect when agents reuse legacy access.

• Identity misuse is less visible when actions look “automated.”

• Traditional IAM reviews miss continuous agent behavior.

Learn more about Opsin’s AI detection and response solution.

What new failure modes emerge when agents invoke tools and APIs autonomously?

Autonomous tool invocation introduces systemic risk when permissions and intent drift apart over time.

• Agents may trigger workflows outside their original scope.

• APIs can be misused repeatedly without rate limits or context checks.

• Misaligned objectives can cause silent data modification.

• Errors persist until behavior, not just access, is monitored.

How does Opsin help organizations discover hidden AI agents and copilots?

Opsin continuously inventories AI agents, copilots, and AI-driven workflows across enterprise environments.

• Identifies agents accessing sensitive data in Microsoft 365 and Google Workspace.

• Reveals inherited permissions and hidden data paths.

• Eliminates blind spots created by user-built or shadow AI tools.

• Maintains an always-current view of AI exposure.

Discover Opsin’s ongoing oversharing protection solution.

How does Opsin support faster remediation without slowing down AI adoption?

Opsin enables decentralized, accountable remediation that fixes risk at the source without blocking productivity.

• Provides clear, context-rich alerts tied to specific AI exposure.

• Guides data owners step-by-step to remediate oversharing.

• Uses email and messaging workflows to speed resolution.

• Preserves audit evidence for compliance and governance teams.

See how this approach works in practice in Opsin’s customer story on securing Copilot deployments.